Analyzing UEFI firmware (AVF part 2)

By Leet on 2025-03-24 13:44:39

After my last post, I did some more experimenting with the EFI implementation provided. Recall the issue: Windows refuses to boot inside Apple's virtualized firmware. In the previous post, I mentioned that it could be related to the firmware not implementing the UEFI Graphics Output Protocol (GOP) correctly. I also said that Windows was booting. It turns out that neither is the case. In this post we will look at why the first statement is false, and how to analyze this in more depth.

Legend for AVF blog series

- Part 1: Basic experimentation and intro to AVF

- Part 2: Analyzing UEFI firmware (this post)

- Part 3: Analyzing Windows booting + pure luck

- Finale: Enabling the GPU

Analyzing the issues

Linux works fine

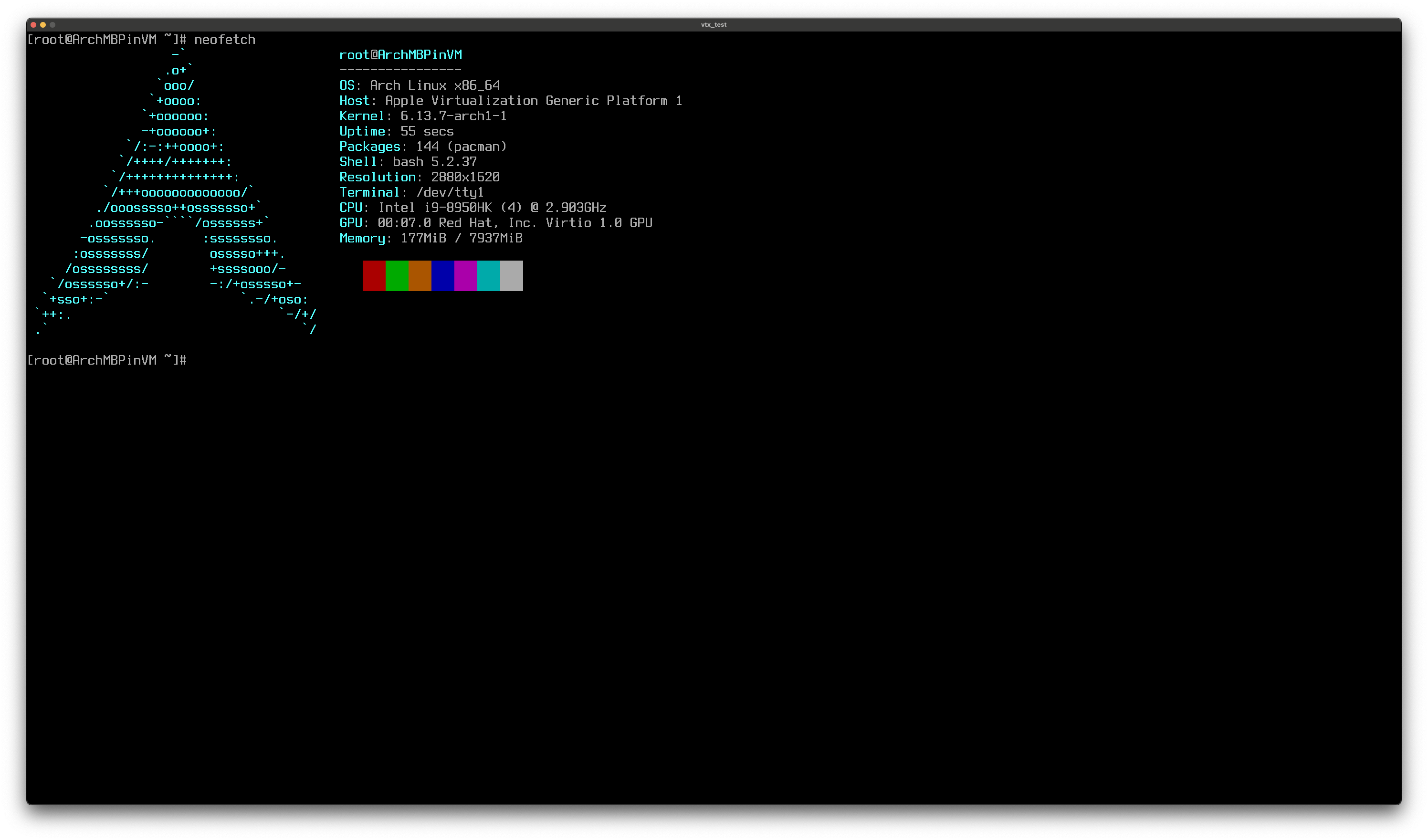

The first clue which tells us that GOP is working as expected is that Linux boots fine. More specifically: in early booting stages, Linux uses the EFI framebuffer for output. When booting Arch, at no stage is the display bugged and/or empty. This indicates that the EFI frame buffer (allocated by GOP) is working fine.

Windows can boot headless

You might have never tried this before, but Windows can boot up fully headless. That is: without a keyboard, mouse, or display. Even if GOP did not behave correctly, the system should boot fine. Unlike Windows 7, Windows 10 does not require INT10H support and/or any graphics during boot.

To determine whether Windows was actually booting, I created a VM without a keyboard, mouse, or display, running a Windows 10 installation configured to make an HTTP request to a local server on boot. This way, I could detect whether the operating system loaded successfully. As a control, I ran the same test in QEMU (with HVF) using a very similar configuration. Both VMs use the same disk image (Windows 10 22H2 + Virtio Guest Tools). I also repeated the test 5 times on each VM to be sure.
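A listener for this kind of boot check can be very small. The following is a minimal sketch, not the server used for the test: the names `BootBeaconHandler` and `start_beacon_server`, the default address, and the port are all assumptions made for illustration.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class BootBeaconHandler(BaseHTTPRequestHandler):
    """Records every GET request so a booted guest can announce itself."""

    hits = []  # shared list of (client_ip, path) tuples

    def do_GET(self):
        # Remember who called and which path they requested.
        self.hits.append((self.client_address[0], self.path))
        self.send_response(200)
        self.end_headers()
        self.wfile.write(b"ok")

    def log_message(self, format, *args):
        pass  # silence the default per-request logging on stderr


def start_beacon_server(host="127.0.0.1", port=0):
    """Start the listener on a background thread (port 0 picks a free port)."""
    server = HTTPServer((host, port), BootBeaconHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

To accept requests from a guest, the server would need to bind to an address the VM can reach (for example `start_beacon_server("0.0.0.0", 8000)`). After the waiting period, an empty `BootBeaconHandler.hits` means no request arrived.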

The results? Well, as expected, QEMU boots up properly every single time. Contrary to what I thought though, Windows 10 was not booting inside the AVF virtual machine. I waited 5 minutes for each test to ensure it was not just being slow, but I got zero requests on the server.

Maybe it is UEFI?

My first thought was that it was caused by the barebones EFI implementation (as I said in my previous post, I did not even think it was UEFI). So I started digging a little bit. I tried booting with Quibble, an alternative boot loader for Windows, but that did not even try to load. Not surprising, considering it does not even load in QEMU. I also tried booting through rEFInd, which, although unsuccessful, brought me to a useful debugging tool: the UEFI Shell.

Some testing with the shell

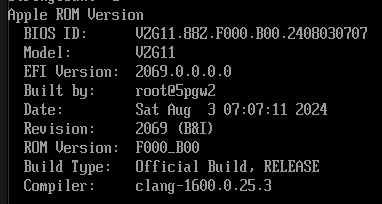

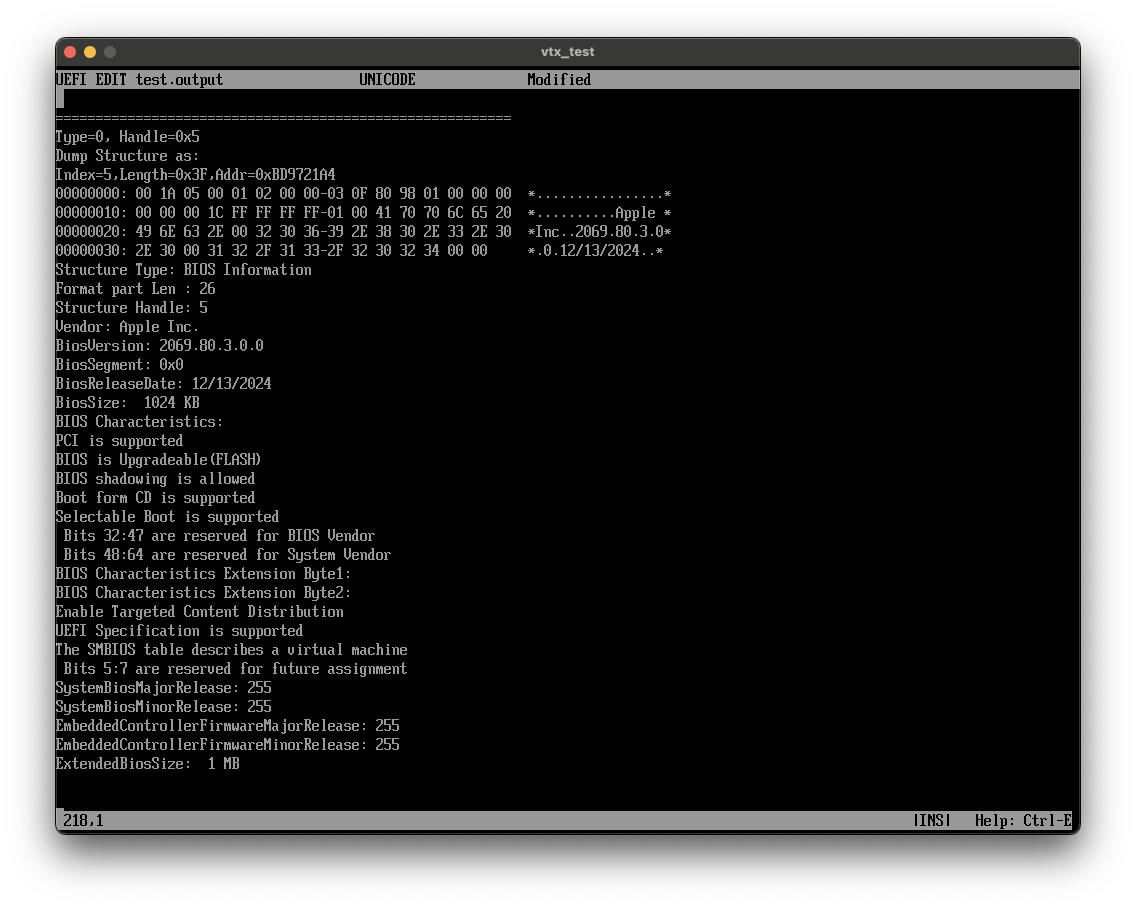

Once I was in the UEFI shell, I was curious about how much information I could retrieve from the firmware. So I started randomly running some commands to see what I'd get. I expected the (at this point not yet U)EFI implementation to be very very bare bones. But after some digging in SMBIOS, I discovered something interesting:

(Note: There was a host firmware update between these images)

This is the same firmware as on Intel T2 Macs. In fact, this firmware version is precisely the same as the one from the host system (2018 15" MacBook Pro). This could mean one of two things:

- AVF's firmware is updated with the OS and is built from the same source as normal Apple firmware.

- Apple is spoofing the BIOS version in the VM.

I have no reason to believe either over the other. I'm hoping more analysis will help me decide.

More controlled testing

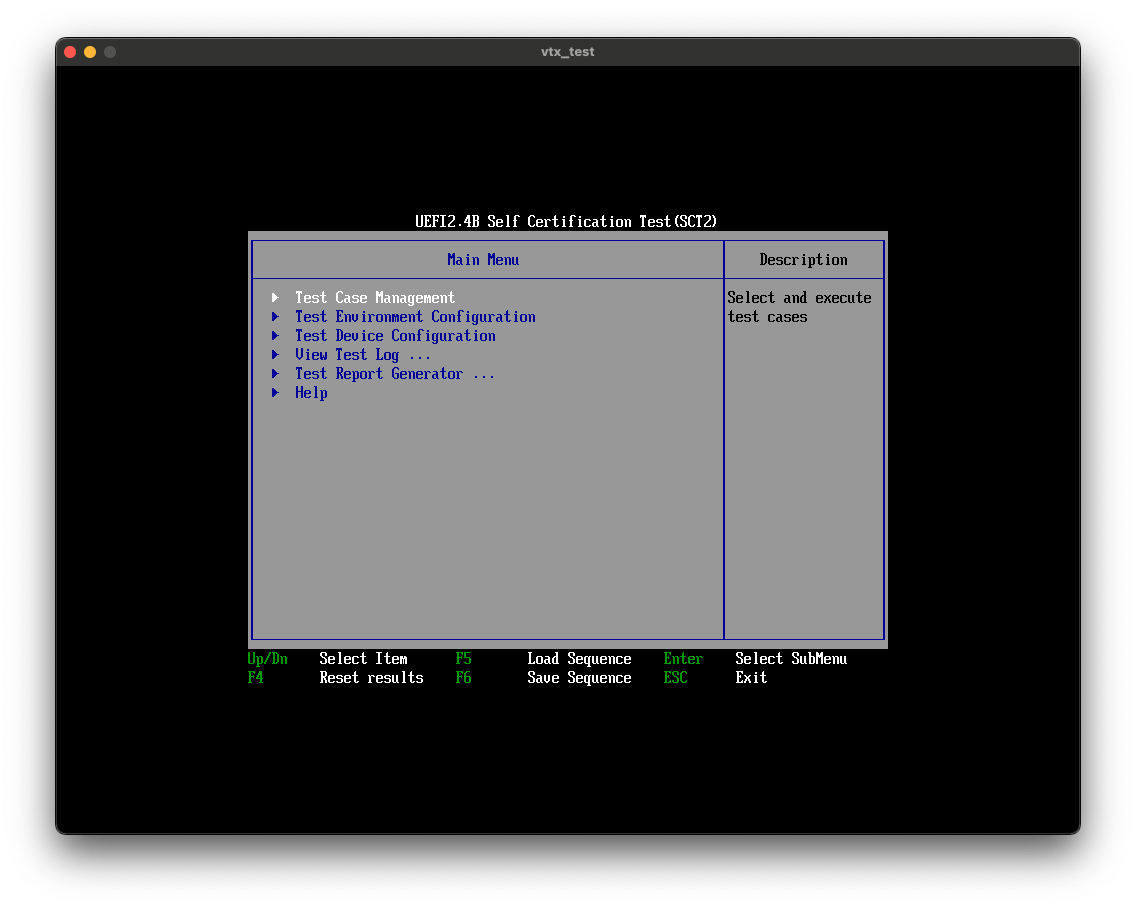

So I looked up tools for testing UEFI firmware implementations. I came across the UEFI Self-Certification Test (SCT). This is a testing suite designed by the UEFI Forum for exactly this purpose: testing if firmware conforms to UEFI specifications. I initially chose version SCT 2.3.1C, as UEFI 2.3.1 is what Microsoft recommends. But I could not get that version running at all, so I settled on SCT 2.4B.

Self-Certification test

UEFI SCT is actually very simple. It is a set of EFI binaries that you can put on any FAT32-formatted partition, volume, disk, or USB drive and boot through an EFI shell. Once SCT is loaded, you can select the subset of tests you want to perform and press F9 to run them. You can also load and save preconfigured test sequences. The tests are very in-depth: each test has subtests, which can have further subtests, each of which exercises 10-15 functions. Overall, the average test sequence tests thousands of UEFI capabilities.

My test sequence

The goal is to create a testing sequence for the elements of the UEFI specification that Microsoft claims Windows uses. I will not go into the details here, but the test sequence I made and used is available on GitHub (see end of page). I am going to perform the test both in the guest and host system. According to SMBIOS, I should get the same test results, since they are the same firmware. But I already know they are not since Windows does not boot in the guest.

- If the test results are different, then I can confirm that Apple is spoofing firmware

- If a test fails on the host, I can disregard it on the guest, because Windows does not need it to boot on the host.

- I will then look at the test results for the guest to see what is missing from the firmware (if anything)

First review

Firstly I want to say that I massively underestimated the firmware used in AVF virtual machines. It is a good implementation of the UEFI specification. But not entirely of course. Some interesting findings:

- Graphics Output Protocol exceeded my expectations. Not only is it implemented, it is implemented fully according to specification. So my hunch about GOP was indeed false.

- The same test sequence ran a different total number of tests on each platform. On my MacBook, there were 7673 tests. In the VM, only 4926 tests were run. This skews the results if no cleaning is done, so the data analysis only takes into account tests that ran on both platforms.

- Even taking the skew into account, the host had A LOT more failed tests. I kind of expected this already: the SCT GUI did not even load on the host, so I had to run the tests through the command line.

- Apple is definitely spoofing the firmware version in the VM. I am not aware of any benefits to doing that, but the firmware in the VM is vastly different from the one used in Intel Macs.

Detailed result info

After running the test, SCT can generate a .csv file. This file contains:

- An overview of the tests ran (Table: "Service\Protocol Name","Total","Failed","Passed")

- A list of failed tests + locations to their log files (Table: "Service\Protocol Name","Index","Instance","Iteration","Guid","Result","Title","Runtime Information","Case Revision","Case GUID","Device Path","Logfile Name")

- A list of passed tests (Table: "Service\Protocol Name","Index","Instance","Iteration","Guid","Result","Title","Runtime Information","Case Revision","Case GUID")

- System info (Raw, unformatted output of the following commands: map -v, memmap, pci, ver, and dh -v)
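Because one file holds several tables, it has to be split before it can be analyzed. The sketch below shows one way to do that with the standard library. It is not the parsing code from the repository, and it rests on two assumptions about the layout: every table starts with a header row whose first cell is `Service\Protocol Name`, and any row with a different column count (such as the raw system info) does not belong to a table. The function name `split_tables` is made up for this example.

```python
import csv
import io

HEADER_KEY = "Service\\Protocol Name"  # first cell of every table header


def split_tables(report_text):
    """Split a multi-table CSV report into one list of row dicts per table.

    Assumption: a header row starts with HEADER_KEY, and rows whose column
    count differs from the current header are not table rows.
    """
    tables = []
    header = None
    for row in csv.reader(io.StringIO(report_text)):
        if row and row[0] == HEADER_KEY:
            header = row       # a new table begins here
            tables.append([])
        elif header is not None and len(row) == len(header):
            tables[-1].append(dict(zip(header, row)))
    return tables
```

With the layout described above, `split_tables(text)` would return three lists: the overview, the failed tests, and the passed tests.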

Data analysis

To get a better look at the results, I performed data analysis. I mainly did this with Anaconda, Jupyter Notebook, and JetBrains DataSpell, since I am familiar with that environment. For both the guest and host platforms, I loaded in two tables: the test overviews and the failed and passed tests concatenated. These can be concatenated because their columns match. Each test runs multiple times. For all unique Test GUIDs, I selected the first data entry from each dataframe and set Result to FAIL if ANY of the tests with the ID failed.
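The deduplication rule can be written down in a few lines. This is a plain-Python stand-in for the dataframe version, not the notebook code itself. It assumes that each test row is a dict, that the `Guid` column identifies a unique test, and that failed rows carry the value `FAIL` in `Result`. The function name `collapse_by_guid` is hypothetical.

```python
def collapse_by_guid(rows, guid_col="Guid", result_col="Result"):
    """Keep the first row per GUID and mark it FAIL if any row with that GUID failed."""
    unique = {}
    for row in rows:
        guid = row[guid_col]
        if guid not in unique:
            unique[guid] = dict(row)  # copy so the input rows stay untouched
        if row[result_col] == "FAIL":
            unique[guid][result_col] = "FAIL"
    return unique
```

Calling it on the concatenated list (`collapse_by_guid(passed_rows + failed_rows)`) gives one entry per unique test, keyed by GUID.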

In total, 2182 unique tests were run in both runs. Even though the sequence used was the same, SCT decided to perform a different number of tests depending on the platform. SCT of course also runs tests multiple times, so the actual number of tests performed is greater. But for the research for this post, I was only interested in the results of unique tests.
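Restricting the comparison to tests present in both runs is a set intersection on the test GUIDs. The sketch below assumes each run has already been reduced to a dict that maps a GUID to a row with a `Result` field. It also applies the rule from earlier in the post: a test that fails on the host is disregarded on the guest. The function name `shared_tests` is made up for this example.

```python
def shared_tests(host, guest, result_col="Result"):
    """Return the GUIDs present in both runs and the failures unique to the guest."""
    common = host.keys() & guest.keys()  # tests that ran on both platforms
    guest_only_failures = sorted(
        guid
        for guid in common
        if guest[guid][result_col] == "FAIL" and host[guid][result_col] != "FAIL"
    )
    return common, guest_only_failures
```

The length of `common` is the number of unique tests shared by both runs, and `guest_only_failures` is the short list worth reading log files for.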

Graphs

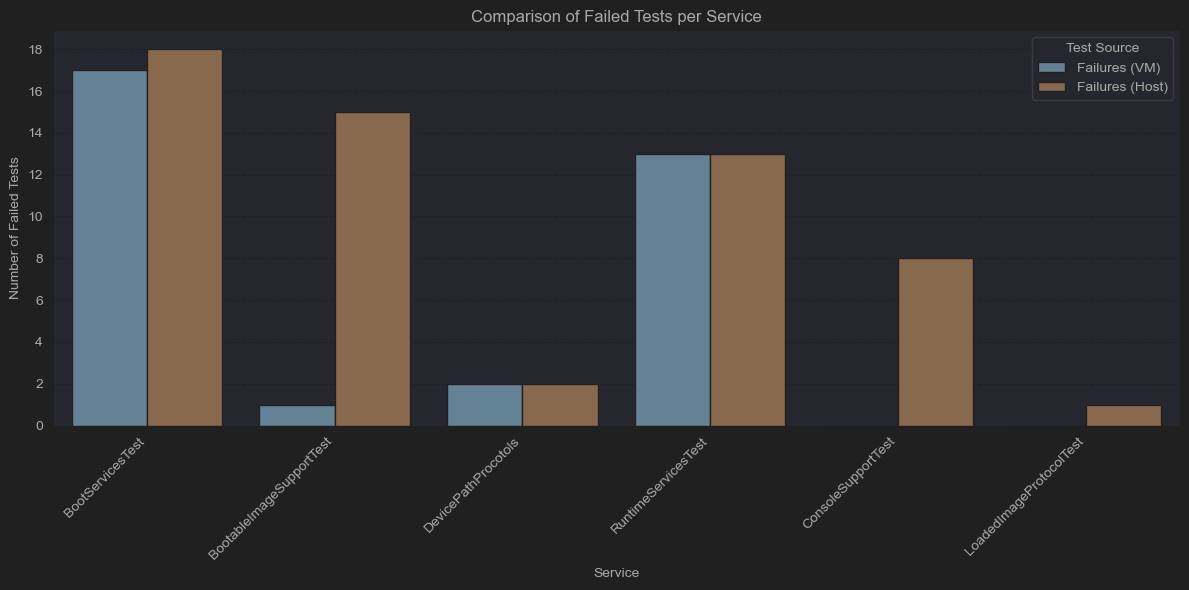

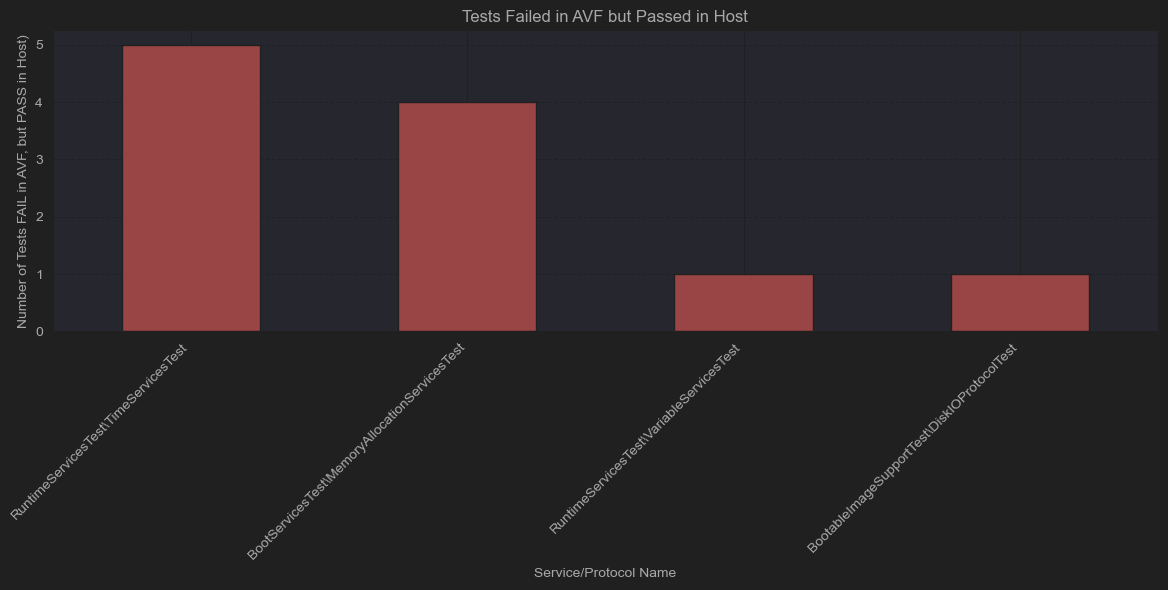

I then started graphing the mined data. To get a quick overview of the data, I created a bar plot for the number of failures in each service for both runs:

SetVariable, SetTime, SetWakeupTime, ReadDisk, AllocatePool, AllocatePages

These may seem like critical functions (allocating memory, timers, reading from the disk), but the tests that failed are extremely specific. Remember that each function has 15+ tests, so having an odd one fail is not a deal-breaker.
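The numbers behind a failures-per-service bar plot can be produced with a simple counter. This sketch leaves out the plotting itself and only builds the data. It assumes each test is a dict with the `Service\Protocol Name` and `Result` columns from the SCT report, and the function names are hypothetical.

```python
from collections import Counter

SERVICE_COL = "Service\\Protocol Name"


def failures_per_service(tests):
    """Count failed tests per service or protocol name."""
    return Counter(t[SERVICE_COL] for t in tests if t["Result"] == "FAIL")


def side_by_side(host_tests, guest_tests):
    """Map each service to a (host_failures, guest_failures) pair."""
    host = failures_per_service(host_tests)
    guest = failures_per_service(guest_tests)
    # A Counter returns 0 for a service that has no failures in one of the runs.
    return {s: (host[s], guest[s]) for s in sorted(host.keys() | guest.keys())}
```

Each pair in the result corresponds to one group of two bars, which makes it easy to see which services fail only in the guest.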

Conclusion

The UEFI implementation in Apple's Virtualization framework (AVF) is solid. I think it might be directly based on OVMF. The Windows kernel is probably loading but failing to initialize. UEFI services are no longer available at that point, so the issue does not lie in the firmware. In part 3 I attempt to do kernel debugging. Or at least, until I make a discovery. Stay tuned.

GitHub

I have uploaded the UEFI SCT test sequence, the outputs, and the Python code used to parse the results on GitHub. I have only omitted the system info from the .csv generated by SCT for my host system, as it seems to contain info about my network adapters.